Last week we published part one of our five-part Amazon’s Elastic Kubernetes Service (EKS) security blog series discussing how to securely design your EKS clusters. This blog post expands on the EKS cluster security discussion and identifies security best practices for your critical cluster add-ons.

EKS leaves the task of installing and managing most AWS service integrations and common Kubernetes extensions to the user. These optional features–often called add-ons–require heightened privileges or present other challenges addressed below.

Don’t Install the Kubernetes Dashboard

Why: While the venerable Kubernetes Dashboard is still a popular web UI alternative to kubectl, its lack of security features makes it a potentially huge risk for cluster security breaches for several reasons.

- It requires cluster admin RBAC permissions to function properly. Even if it has only been granted read permissions, the dashboard still shares information that most workloads in the cluster should not need to access. Users who need to access the information should be using their own RBAC credentials and permissions.

- The installation recommended in the EKS docs tells users to authenticate when connecting to the dashboard by fetching the authentication token for the dashboard’s cluster service account, which, again, may have cluster-admin privileges. That means a service account token with full cluster privileges and whose use cannot be traced to a human is now floating around outside the cluster.

- Even if users set up another authentication method, like putting a password-protected reverse proxy in front of the dashboard, the service has no method to customize authorization per user.

- Everyone who can access the dashboard can make any queries or changes permitted by the service’s RBAC role. Even with a role binding that grants read-only permissions for all Kubernetes resources, users could still read all the cluster secrets. Tesla’s AWS account credentials were stolen and exploited exactly this way.

- The Kubernetes Dashboard has been the subject of a number of CVEs. Because of its access to the cluster’s Kubernetes API and its lack of internal controls, vulnerabilities can be extremely dangerous.

What to do: Don’t install the Kubernetes Dashboard.

AWS Fargate for Nodeless EKS

Why: Many EKS users were excited when AWS introduced the ability to run EKS pods on the “serverless” Fargate service. Using Fargate reduces the number of nodes that users need to manage, which, as we have seen, has a fair amount of operational overhead for the user. It also handles on-demand, temporary capacity for fluctuating workloads. However, there are some serious drawbacks to using Fargate with EKS, both operational and for workload security.

- Kubernetes network policies silently have no effect on pods assigned to Fargate nodes. Daemon sets, which put a pod for a service on each node, cannot place pods on the Fargate virtual nodes. Even if Calico could run as a sidecar in a pod, it would not have permission to manage the pod’s routing, which requires root privileges. Fargate only allows unprivileged containers.

- Active security monitoring of a container’s actions on Fargate becomes difficult or nearly impossible.

- Any metrics or log collectors that a user may normally run as a cluster daemon set will also have to be converted to sidecars, if possible.

- EKS still requires clusters that use Fargate for all their pod scheduling to have at least one node.

- The exact security implications and vulnerabilities of running EKS pods on Fargate remain unknown for now.

What to do: At this time, even AWS does not recommend running sensitive workloads on Fargate. For users who have variable loads, the Kubernetes cluster autoscaler can manage creating and terminating nodes as needed based on the cluster’s current capacity requirements.

Amazon EFS CSI Driver

Why: The EFS (Elastic File System) CSI (Container Storage Interface) allows users to create Kubernetes persistent volumes from EFS file systems. The Amazon EFS CSI Driver enables the use of EFS file systems as persistent volumes in EKS clusters.

EFS file systems are created with a top-level directory only writable by the root user. Only a root user can chown (change ownership) of its own directory, but allowing a container in EKS to use the file system by running as root creates extremely serious security risks. Running containers only as unprivileged users provides one of the most important protections for the safety of your cluster.

What to do: Currently the EFS CSI driver cannot actually create EFS file systems. It can only make existing file systems available to an EKS cluster as persistent volumes. Make changing the permissions or ownership of the top-level directory part of the standard procedure for creating EFS file systems for use by the driver.

As an alternative, you can add an init container which does have root privileges to chown the filesystem to the runtime user. When a pod has an init container, the main containers will not run until the init container has exited successfully. This method is less secure overall, although the risk of exploitation of the init container running as root is slightly decreased due to the short lifespan of init containers. An example method could be by adding a block like the following to your PodSpec:

initContainers:

- name: chown-efs

# Update image to current release without using latest tag

image: busybox:1.31.1

# Put your application container's UID and EFS mount point here

command: ['sh', '-c', '/bin/chown 1337 /my/efs/mount']

securityContext:

# We're running as root, so add some protection

readOnlyRootFilesystem: true

volumeMounts:

- name: efs-volume

mountPath: /my/efs/mountThen make sure to add runAsUser: 1337 (or whatever UID you chose) to the pod-level securityContext.

Protecting EC2 Instance Metadata Credentials and Securing Privileged Workloads

Why: The EKS documentation provides instructions for installing a number of Kubernetes services and controllers into an EKS cluster. Many of these add-ons are widely used in the Kubernetes community, including the kube-metrics service, which collects key resource usage numbers from running containers and is a required dependency of the horizontal pod autoscaler (HPA); the cluster autoscaler, which creates and terminates nodes based on cluster load; and Prometheus for collecting custom application metrics.

Some of these popular components need to interact with AWS service APIs, which requires AWS IAM credentials. The EKS documentation for installing and configuring some of these services does not always address or recommend secure methods for keeping these often-critical deployments and their IAM credentials safely isolated from user workloads.

Add-ons covered in the EKS documentation that require IAM credentials include the cluster autoscaler, the Amazon EBS CSI driver, the AWS App Mesh controller, and the ALB ingress controller. Other third-party Kubernetes controllers, including ingress controllers, container storage interface (CSI) drivers, and secrets management interfaces, may also need the ability to manage AWS resources.

Some of these add-ons which serve important functions for management and maintenance of the cluster, like the cluster autoscaler, and which tend to be standard options on other managed Kubernetes offerings, would normally run on master nodes, which generally allow only pods for critical system services. For managed Kubernetes services, the cloud provider would generally install the service on the control plane and the user would only need to configure the runtime behavior. Because EKS will not install or manage these services, not only does that work fall on users who need them, the user also has to take extra measures to lock down these services because they are not in their normally isolated environment.

In addition to issues around managing the method used to deliver the IAM credentials to the component pods, all too often, rather than strictly following the principle of least privilege, the recommended IAM policies for these services are excessive or not targeted to the specific cluster resources that the component needs to manage.

What to do: Locking down these critical, privileged components requires addressing several different areas of concern. Below, we describe some of the general considerations for managing IAM credentials for cluster workloads. At the end of this section, we give step-by-step instructions for locking down privileged add-ons and isolating their IAM credentials.

IAM Policies and the Principle of Least Privilege

Carefully evaluating the recommended IAM policies for opportunities to limit the scope to the resources actually needed by the cluster, as well as protecting the IAM credentials tied to these policies, becomes critical to reducing the risk of the exploitation of the access key or token and to limiting the potential for damage if the credentials do get compromised.

Privileges for many AWS API actions can be scoped to a specific resource or set of resources by using the IAM policy Condition element. In the section on Cluster Design, we recommended tagging all your cluster resources with unique tags for each cluster, largely because tag values can help limit IAM permission grants. Some AWS API actions do not support evaluating access by tags, but those actions tend to be read-only calls like Describe* (list). Write actions, which can modify, create, or destroy resources, usually support conditional scoping.

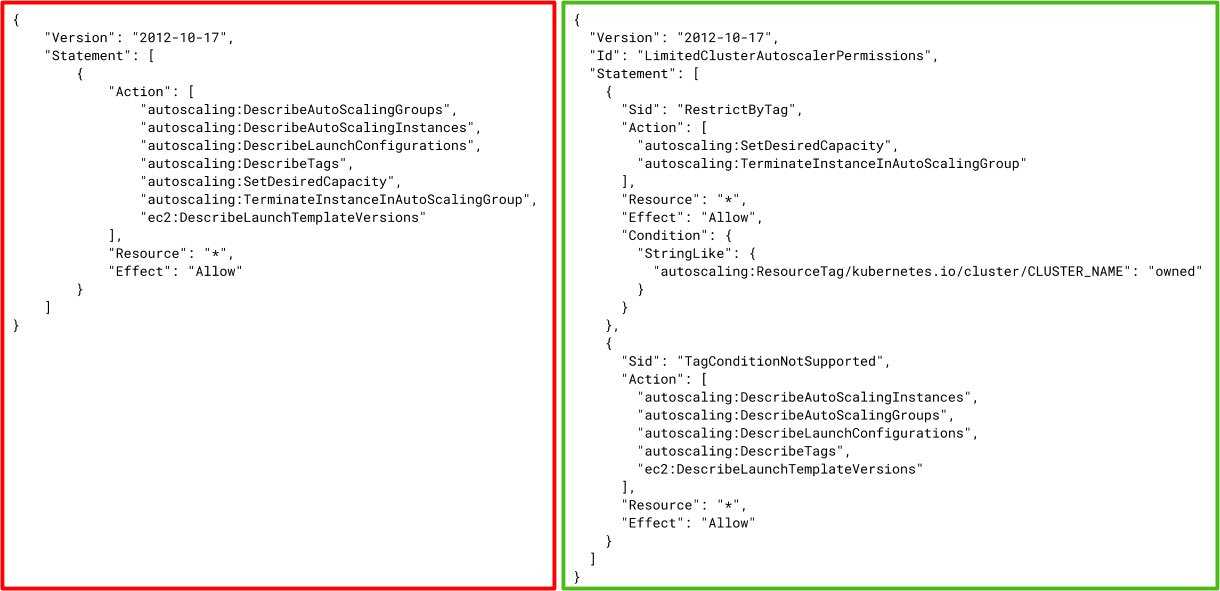

For example, compare the suggested policy for the cluster autoscaler on the left with the policy on the right, which scopes write actions to resources with a specific cluster’s tag.

Isolating Critical Cluster Workloads

As mentioned above, controllers that play a role in managing the cluster itself normally would get deployed to the master nodes or control plane of a cluster, out of reach of user workloads on the worker nodes. For managed services like EKS, unless the provider offers the option to manage the add-on itself, the user can only install these components on worker nodes.

We can still add a measure of security by mimicking to a degree the isolation of master nodes by creating a separate node group dedicated to critical workloads. By using node taints, we can restrict which pods can get scheduled on a node. (Note that any pod can still get scheduled on a node with a taint if they add the correct toleration. An admission controller like Open Policy Agent Gatekeeper should be used to prevent user pods from using unauthorized tolerations.)

Manage IAM Credentials for Pods

A common method to deliver AWS IAM credentials to single-tenant EC2 instances uses the EC2 metadata endpoint and EC2 instance profiles, which are associated with an IAM role. One of the metadata endpoint’s paths, /iam/security-credentials, returns temporary IAM credentials associated with the instance’s role. Kubernetes nodes, however, are very much multi-tenant. Even if all the workloads on your nodes are the applications you deployed, the mix of workloads provides more opportunities that an attacker can try to exploit to get access. Even if you wanted the pods of one deployment to share the instance’s credentials and IAM permissions, which still is not a good idea, you would have to prevent all the other pods on the node from doing the same. You also do not want to create static IAM access keys for the pods and deliver them as a Kubernetes secret as an alternative. Some options exist for managing pod IAM tokens and protecting the credentials belonging to the instance.

For the cluster deployments that do need IAM credentials, a few options exist.

- AWS offers a way to integrate IAM roles with cluster service accounts. This method requires configuring an external OpenID provider to serve the authentication tokens.

- Two similar open-source projects exist to intercept requests from pods to the metadata endpoint and return temporary IAM credentials with scoped permissions: kiam and kube2iam. kiam seems to be a little more secure, offering the ability to limit the AWS IAM permissions to server pods, which should therefore be strongly isolated like the controllers mentioned above.

- Use a service like Hashicorp Vault, which can dynamically manage AWS IAM access and now supports Kubernetes pod secret injection.

(Skip this step if you are using kiam or kube2iam.) Use Network Policies to block pod egress to the metadata endpoint after installing the Calico CNI. You can use a Kubernetes NetworkPolicy resource type, but note these resources would need to be added to every namespace in the cluster.

apiVersion: networking.k8s.io/v1

kind: NetworkPolicy

metadata:

name: deny-instance-metadata

namespace: one-of-many

spec:

podSelector:

matchLabels: {}

policyTypes:

- Egress

egress:

- to:

- ipBlock:

cidr: 0.0.0.0/0\t# Preferably allow list something smaller

except:

- 169.254.169.254/32However, Calico offers some additional resource types, including a GlobalNetworkPolicy which applies to all the namespaces in the cluster.

apiVersion: crd.projectcalico.org/v1

kind: GlobalNetworkPolicy

metadata:

name: deny-instance-metadata

spec:

types:

- Egress

egress:

- action: Deny

destination:

nets:

- 169.254.169.254/32

source: {}Step-by-Step Instructions for Isolating Critical Add-ons

This section will use the Kubernetes cluster autoscaler service as an example for how to deploy privileged add-ons with some additional protections. The EKS documentation for installing the cluster autoscaler, which can dynamically manage adding and removing nodes based on the cluster workload, does not always follow best practices for either cluster or EC2 security. The alternative shown below will yield more security, partially by mimicking the protected nature of master nodes in a standard Kubernetes cluster.

Note that the cluster autoscaler service can only manage scaling for the cluster in which it is deployed. If you want to use node autoscaling in multiple EKS clusters, you will need to do the following for each cluster.

- The recommended IAM policy for the cluster autoscaler service grants permission to modify all the autoscaling groups in the AWS account, not just the target cluster’s node groups. Use the following policy instead to limit the write operations to one cluster’s node groups, replacing the CLUSTER_NAME in the Condition with your cluster’s name. (The Describe* operations cannot be restricted by resource tags.)

{

"Version": "2012-10-17",

"Id": "LimitedClusterAutoscalerPermissions",

"Statement": [

{

"Sid": "RestrictByTag",

"Action": [

"autoscaling:SetDesiredCapacity",

"autoscaling:TerminateInstanceInAutoScalingGroup"

],

"Resource": "*",

"Effect": "Allow",

"Condition": {

"StringLike": {

"autoscaling:ResourceTag/kubernetes.io/cluster/CLUSTER_NAME": "owned"

}

}

},

{

"Sid": "TagConditionNotSupported",

"Action": [

"autoscaling:DescribeAutoScalingInstances",

"autoscaling:DescribeAutoScalingGroups",

"autoscaling:DescribeLaunchConfigurations",

"autoscaling:DescribeTags",

"ec2:DescribeLaunchTemplateVersions"

],

"Resource": "*",

"Effect": "Allow"

}

]

}- Create a separate node group for the privileged controllers. Using nodes that are not shared with unprivileged user workloads will help restrict access to these additional AWS IAM permissions and will help safeguard critical system workloads like the autoscaler service.

- Use a node taint not used for other node groups. (Managed node groups do not support automatic tainting of nodes, so either use a self-managed node group or automate a way to taint this group’s nodes when they join the cluster.) This example uses a taint with key=privileged, value=true, of type NoSchedule.

- Add a label for the nodes in the form

node-group-name=<name of privileged node group> - Attach the above IAM policy to the IAM role used by the node group’s EC2 instance profile, or, preferably, set up one of the alternative IAM credential delivery methods described above.

- Make sure the autoscaling groups for the node groups that need to be managed by the cluster autoscaler have the following tags (

eksctlwill add these automatically when it creates node groups):k8s.io/cluster-autoscaler/<cluster-name>: ownedk8s.io/cluster-autoscaler/enabled: true

- Find the latest patchlevel of the cluster autoscaler version that matches your EKS Kubernetes version here. Note that the major and minor versions of the cluster autoscaler should match, so Kubernetes 1.14 will use a cluster autoscaler version starting with “1.14.” You will use this version number in the next step.

- Save the following script to a file and set the variables at the top to the appropriate values for your cluster. You may need to make further edits if you use a different node taint or node group label than the examples in step 2.

#!/bin/bash

set -e

# Set CLUSTER_NAME to the name of your EKS cluster

CLUSTER_NAME=<cluster name>

# Set PRIVILEGED_NODE_GROUP to the name of your target node group

PRIVILEGED_NODE_GROUP=<node group name>

# Set AUTOSCALER_VERSION to the correct version for your EKS

# cluster Kubernetes version

AUTOSCALER_VERSION=<version string>

# Deploy

kubectl apply -f \

https://raw.githubusercontent.com/kubernetes/autoscaler/master/luster-autoscaler/cloudprovider/aws/examples/luster-autoscaler-autodiscover.yaml

# Update the container command with the right cluster name

# We don't use kubectl patch because there is no predictable way to patch

# positional array parameters if the command arguments list changes

kubectl -n kube-system get deployment.apps/cluster-autoscaler -ojson \

| sed 's/\\u003cYOUR CLUSTER NAME\\u003e/'\"$CLUSTER_NAME\"'/' \

| kubectl replace -f -

# Annotate the deployment so the autoscaler won’t evict its own pods

kubectl -n kube-system annotate deployment.apps/cluster-autoscaler \

--overwrite cluster-autoscaler.kubernetes.io/safe-to-evict=\"false\"

# Update the cluster autoscaler image version to match the Kubernetes

# cluster version and add flags for EKS compatibility to the cluster

# autoscaler command

kubectl -n kube-system patch deployment.apps/cluster-autoscaler -type=json \

--patch=\"$(cat <<EOF

[

{

"op": "replace",

"path": "/spec/template/spec/containers/0/image",

"value": "k8s.gcr.io/cluster-autoscaler:v${AUTOSCALER_VERSION}"

},

{

"op": "add",

"path": "/spec/template/spec/containers/0/command/-",

"value": "--skip-nodes-with-system-pods=false"

},

{

"op": "add",

"path": "/spec/template/spec/containers/0/command/-",

"value": "--balance-similar-node-groups"

}

]

EOF

)"

# Add the taint toleration and the node selector blocks to schedule

# the cluster autoscaler on the privileged node group

kubectl -n kube-system patch deployment.apps/cluster-autoscaler

--patch="$(cat <<EOF

spec:

template:

spec:

tolerations:

- key: "privileged"

operator: "Equal"

value: "true"

effect: "NoSchedule"

nodeSelector:

node-group-name: ${PRIVILEGED_NODE_GROUP}

EOF

)"- Check to make sure the cluster-autoscaler pod in the kube-system namespace gets scheduled on a privileged node and starts running successfully. Once it is running, check the pod’s logs to make sure there are no errors and that it can successfully make the AWS API calls it requires.

- Do not allow user workloads to add a toleration for the taint used by the privileged nodegroup. One method to prevent unwanted pods from tolerating the taint and running on the privileged nodes would be to use an admission controller like the Open Policy Agent’s Gatekeeper.

To complete protection of the IAM credentials on all your cluster’s nodes, use kiam, kube2iam, or IAM role for EKS service accounts (the last option requires configuring a OpenID Connect provider for your AWS account) to manage scoped credentials for the cluster autoscaler and other workloads that need to access AWS APIs. This would allow you to detach the IAM policy above from the node group’s instance profile and to use a Network Policy to block pod access to the EC2 metadata endpoint to protect the node’s IAM credentials.

The recommendations in this section only apply if you use or are considering installing privileged cluster add-ons. Even without the additional tasks required to deploy these safely to protect their AWS credentials, managing add-ons in EKS can carry a good deal of operational overhead. Investing the time to perform these additional recommended steps will protect your cluster and your AWS account from misconfigured applications and malicious attacks.

Even if you do not use any add-ons, but you do have workloads which need to access AWS APIs, be sure to apply a credential management option from Manage IAM Credentials for Pods.